“The disclosures and consumer choices around privacy settings are laughably bad,” says Ben Winters, a director at the Consumer Federation of America. “I would only use it for surface-level things that have nothing to do with your personal information, desires, fears, or anything you would not broadcast on the internet.”

The privacy issues of personalized AI

A virtual assistant that can anticipate your needs, or even be your therapist, is the big prize in AI. And Meta is not the only company pushing deeper into personal data in the hunt for it.

Google added an option for Gemini that lets you allow it to use your Google search history to personalize its answers. ChatGPT added the ability to remember details across conversations.

But this wave of personalization also brings new privacy complications: How do we control what we want the bots to know, and how is our information used? “Just because these tools act like your friend doesn’t mean that they are,” Miranda Bogen of the Center for Democracy & Technology tells me.

During one conversation with Meta AI, Bogen mentioned baby bottles. After that, she says, the chatbot decided she must be a parent. AI systems, already notorious for stereotyping, could lead to new kinds of bias when they are customized. “It might start steering people in directions they may not know about, based on information they are not comfortable with it using as a basis,” she says.

And just imagine the “Black Mirror” scenario when the bot starts inserting ads or nudging you with suggestions.

OpenAI says it doesn’t share ChatGPT content with third parties for marketing purposes. (The Washington Post has a partnership with OpenAI.) Google says conversations are not currently used to show ads, and that the company would communicate any change to users.

“The idea of an agent is that it is working on my behalf, not trying to manipulate me on behalf of others,” says Justin Brookman, the director of technology policy at Consumer Reports. Personalized advertising powered by AI “is inherently adversarial,” he says.

Taking control of your data

There are three things that make Meta AI feel like a big change for our privacy.

First, Meta AI is hooked up to Facebook and Instagram. If you set up a profile for the app using your existing social account, it has access to a mountain of information collected from those social apps. All of that shapes the answers the Meta AI bot gives you, even if Facebook’s or Instagram’s ideas about you are way off base.

Whenever you’re chatting with Meta AI, just picture Zuckerberg watching.

If you don’t want all that data combined, you’ll have to set up a separate Meta AI account.

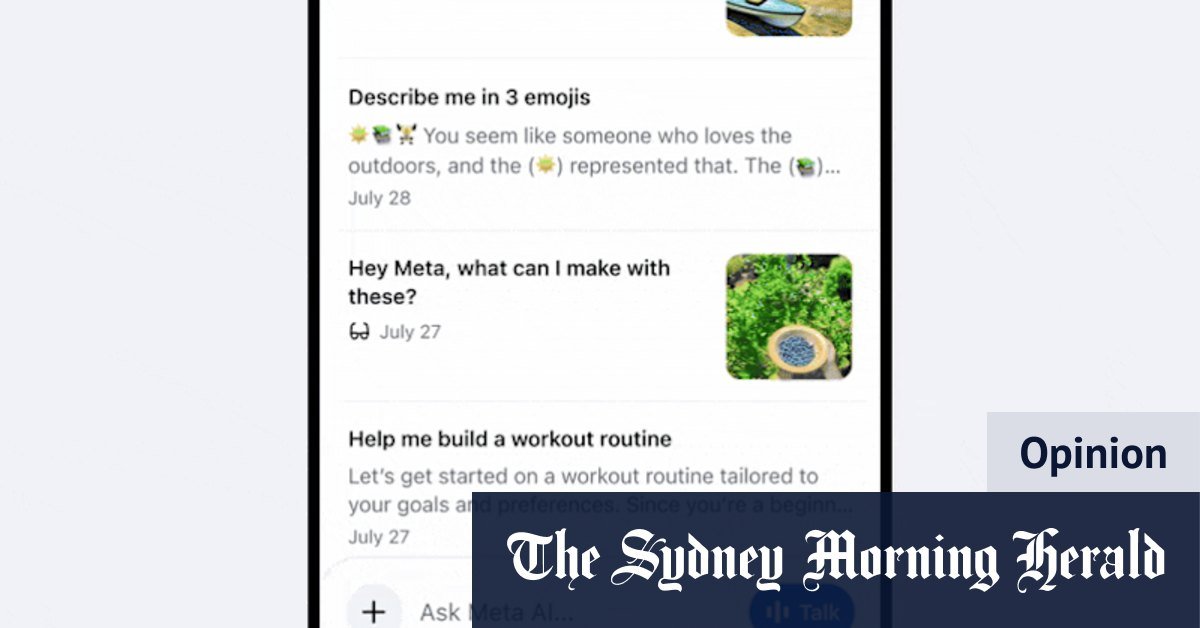

Second, Meta AI remembers everything by default. It keeps a transcript or voice recording of every chat you have with it, together with a list of what it has concluded about you, such as things you mentioned, in a separate memory file. You can see its memories of you in the app’s settings.

But what if there is something you don’t want it to remember? It’s understandable: There are lots of things, like my tests involving fertility techniques and tax evasion, that people only want to Google privately.

Meta says it tries not to add sensitive topics to its memory, but my tests found that it did. What’s worse is that Meta AI doesn’t give you the ability to stop it from saving chats or memories. (The closest you can get is to use the Meta AI website without logging in.) Nor does Meta offer a “temporary” mode that keeps a conversation out of your history.

The Meta AI app does let you delete chats and the contents of the memory file after the fact. But when I tried to delete my memories, the app warned that they were not entirely gone. The original chat containing the information remained in my history and could also be used. So I then had to dig into my chat history, find that original chat and delete it too. (The nuclear option is to tap a button in the settings to delete everything at once.)

And there is a subtler privacy concern, too. The contents of your chats (your words, photos and even voice) end up being fed into Meta’s systems for AI training. ChatGPT lets you opt out of training by switching off a setting labeled “improve the model for everyone.” Meta AI does not offer an opt-out.

Why might you not want to contribute to Meta’s training data? Many artists and writers have taken issue with their work being used to train AI without compensation or acknowledgment.

Some privacy advocates say we should also be concerned that AI systems can “leak” training data in future chats. It’s one reason many corporations restrict their employees to using only AI systems that do not use their data for training.

Meta says it trains and tunes its AI models to limit the possibility of private information appearing in responses to other people.

However, Meta has a warning in its terms of service: “Do not share information that you don’t want the AIs to use and retain.”

I’d take Meta at its word.

The Washington Post